Companies that commit fraud give themselves away in their annual reports

Researchers from UPF and Queen Mary University of London analyse letters written by directors of large Spanish companies and find that the letters of companies that commit fraud tend to use more adjectives and have a more negative tone than those of companies that do not.

“A liar is caught sooner than a lame man”. This well-known Spanish saying is usually quoted when a lie, or the person who told it, is found out. Researchers from the UPF Department of Translation and Language Sciences (DTCL) and the School of Economics and Finance at Queen Mary University of London have done something similar with company reports. The results have been published in the indexed journal Information.

“In the same way that gestures, tics, reflexes or attitudes betray us when we tell a lie, what we say or write can also give us away”, says Núria Bel, a researcher at the UPF Institute of Applied Linguistics (IULA), professor in the DTCL and first author of the study.

The researchers analysed texts written by the directors of large Spanish companies. “The basis of our study comprised the letters from the directors to the company’s shareholders that are published in the annual reports. We identified letters from companies that had been convicted of accounting fraud or had engaged in financial misconduct during the period 2011-2018”, professor Bel explains.

Using machine learning and sentiment analysis (SA) methods, they evaluated the subjective information in the texts, such as polarity, that is, whether the wording is positive (‘fantastic’), negative (‘difficult’) or neutral (‘weekly’), along with other expressions of the writer’s emotions. Sentiment analysis techniques make it possible to infer the writer’s opinion about the subject; they are used, for example, on large numbers of tweets to gauge public opinion about brands, politicians and so on.
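To make polarity concrete, here is a minimal lexicon-based sketch in Python. It is not the study's method: the article does not say which lexicon or tool was used, and the four-word `LEXICON` below is a hypothetical stand-in built from the example words in this article. Words missing from the lexicon count as neutral, and a text's net polarity is the number of positive words minus negative words, divided by the total word count.

```python
import re

# Hypothetical mini-lexicon built from this article's example words; a real
# system would use a far larger, validated sentiment lexicon.
LEXICON = {"fantastic": 1, "favourable": 1, "optimistic": 1, "difficult": -1}

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def word_polarity(word: str) -> str:
    """Label one word; anything missing from the lexicon counts as neutral."""
    score = LEXICON.get(word.lower(), 0)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

def text_polarity(text: str) -> float:
    """Net polarity in [-1, 1]: (positive words - negative words) / all words."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    return sum(LEXICON.get(token, 0) for token in tokens) / len(tokens)

print(word_polarity("fantastic"), word_polarity("difficult"), word_polarity("weekly"))
# positive negative neutral
print(text_polarity("A difficult year"))
```

Dividing by the word count keeps long and short letters comparable; a real system would also need to handle negation, inflected forms and Spanish text.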

They collected the annual financial reports of large companies, which present the previous year’s financial results, and specifically analysed the letter from the CEO, which summarises the year’s activities and sets out the company’s future plans. They also reviewed Spain’s main news media and court decisions from 2011 to 2018 to identify companies that had been sanctioned for accounting fraud or data misrepresentation during that period.

The analysis used 95 texts from 17 companies sanctioned for accounting fraud and 13 non-fraudulent companies. One limitation of the study was the amount of available data, which is not large enough to justify more powerful learning methods such as neural networks, which learn from millions of documents.

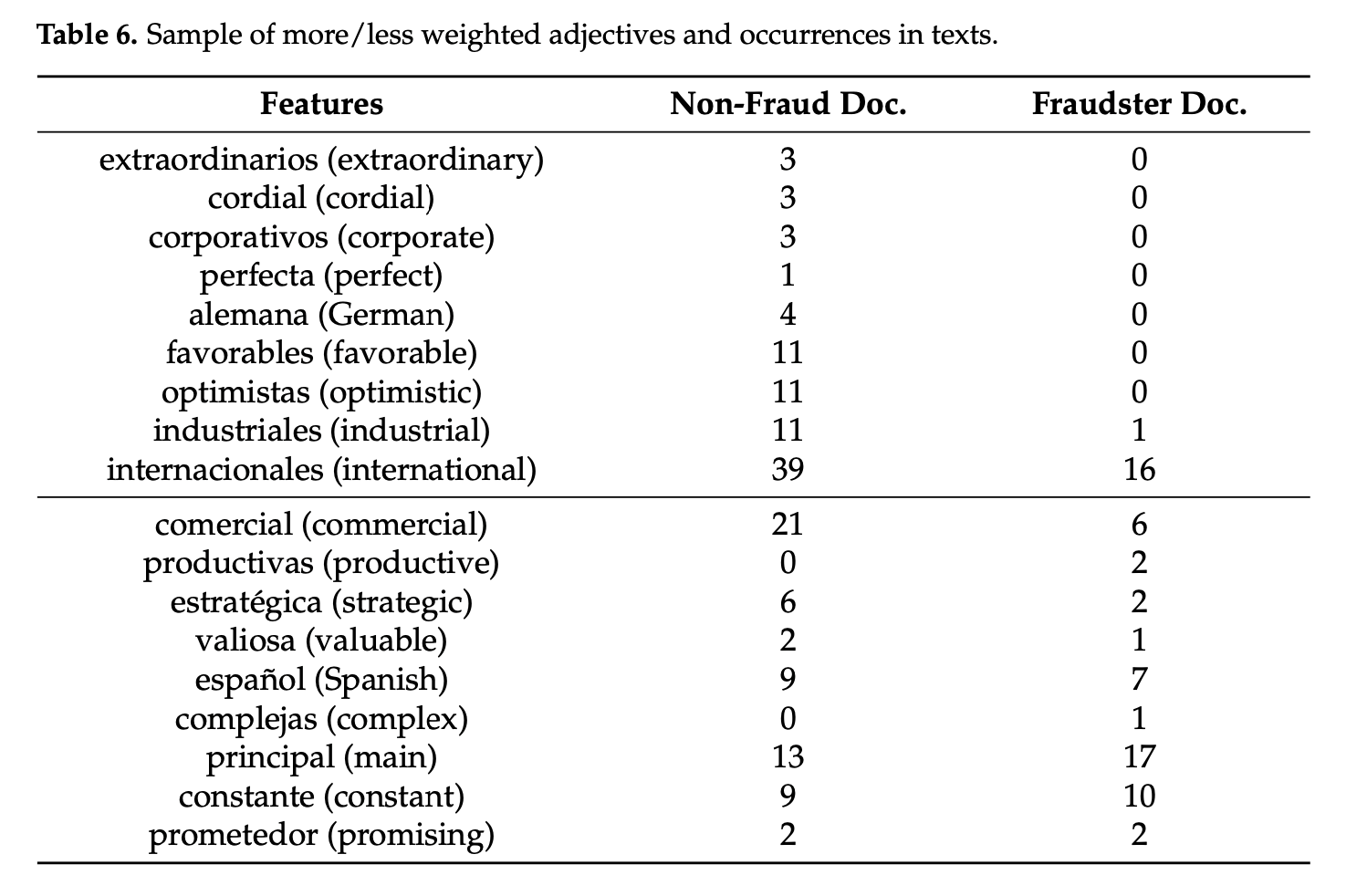

For the system to learn whether or not a text is fraudulent, it was given the presence or absence of each word in a list of more than 600 selected words. Table 1, for example, lists the adjectives that the system learned carry the most weight when predicting whether a text comes from a fraudulent company. Words such as “favourable” and “optimistic” appear 11 times in the non-fraudulent texts but not once in the fraudulent ones studied.
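The word-list features described above can be sketched as binary presence vectors fed to a simple classifier. The article does not name the learning algorithm, so Bernoulli Naive Bayes is swapped in here purely as an illustration; the four-word `VOCAB` and the training sentences are hypothetical toy data, not letters from the study.

```python
import math
import re

def presence_vector(text: str, vocabulary: list[str]) -> list[int]:
    """1 if a vocabulary word occurs anywhere in the text, else 0 (counts ignored)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return [1 if word in words else 0 for word in vocabulary]

class BernoulliNB:
    """Bernoulli Naive Bayes over binary presence features, with Laplace smoothing."""

    def fit(self, X: list[list[int]], y: list[str]) -> "BernoulliNB":
        self.classes = sorted(set(y))
        self.log_prior: dict[str, float] = {}
        self.p_present: dict[str, list[float]] = {}
        n_features = len(X[0])
        for c in self.classes:
            rows = [x for x, label in zip(X, y) if label == c]
            self.log_prior[c] = math.log(len(rows) / len(X))
            # P(word present | class); +1/+2 smoothing keeps values strictly between 0 and 1
            self.p_present[c] = [
                (sum(row[j] for row in rows) + 1) / (len(rows) + 2)
                for j in range(n_features)
            ]
        return self

    def predict(self, x: list[int]) -> str:
        def log_likelihood(c: str) -> float:
            total = self.log_prior[c]
            # Absent words count too: they contribute log(1 - p)
            for xj, p in zip(x, self.p_present[c]):
                total += math.log(p if xj else 1 - p)
            return total
        return max(self.classes, key=log_likelihood)

# Hypothetical toy data, not taken from the study
VOCAB = ["favourable", "optimistic", "difficult", "uncertain"]
TRAIN = [
    ("A favourable and optimistic outlook for next year", "non-fraud"),
    ("Results were favourable across all divisions", "non-fraud"),
    ("It was a difficult and uncertain year", "fraud"),
    ("Markets remained difficult", "fraud"),
]
X = [presence_vector(text, VOCAB) for text, _ in TRAIN]
y = [label for _, label in TRAIN]
model = BernoulliNB().fit(X, y)
print(model.predict(presence_vector("An optimistic plan", VOCAB)))  # non-fraud
```

A Bernoulli model suits presence/absence features because it scores missing words as well as present ones, so a word like “optimistic” that never appears in one class pushes predictions away from that class.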

“In the literature, deceptive texts had been documented as tending to be less positive than truthful ones; their tone is more negative. In other words, it was known that the choice between expressions such as ‘this is not easy’ and ‘this is difficult’ is made unconsciously and affects the overall tone of the message. They might look the same, but they are not”, professor Bel continues.
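A small sketch of why near-synonymous phrasings can register differently for a word-based system. It assumes a hypothetical two-word lexicon and a naive rule that flips a word's polarity when it directly follows a negator such as “not”; it does not describe how the study itself handled negation.

```python
import re

LEXICON = {"easy": 1, "difficult": -1}  # hypothetical two-word lexicon
NEGATORS = {"not", "never", "no"}

def polarity(text: str, handle_negation: bool) -> int:
    """Sum word polarities; optionally flip a word that directly follows a negator."""
    tokens = re.findall(r"[a-z]+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        if handle_negation and i > 0 and tokens[i - 1] in NEGATORS:
            value = -value  # "not easy" is scored as negative
        score += value
    return score

for sentence in ("This is not easy", "This is difficult"):
    print(sentence, polarity(sentence, False), polarity(sentence, True))
# This is not easy 1 -1
# This is difficult -1 -1
```

Without negation handling the two sentences get opposite scores; even with it, a word-presence model still sees two different words, “easy” and “difficult”, so the two phrasings remain distinct inputs.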

Previous studies had conducted these analyses on spontaneous texts, such as conference talks, emails or social media posts. In this case, however, the texts are often edited or reviewed by several people. “So it was worth asking whether pointers could be found in these edited texts, that is, whether the negative tone persisted in fraudulent texts, and the study shows that it does”, she continues. “Thanks to sentiment analysis methods with machine learning, these texts can be classified with a high degree of accuracy, 84%, that is, the system would misclassify roughly 2 texts out of ten”.
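The figures in the quote can be checked with simple arithmetic, assuming the 84% refers to overall accuracy (the share of texts classified correctly). Accuracy counts both kinds of error, fraudulent letters labelled as honest and honest letters labelled as fraudulent, which is why the second line uses the standard confusion-matrix counts TP, TN, FP and FN (true/false positives and negatives).

$$1 - 0.84 = 0.16, \qquad 0.16 \times 10 = 1.6 \approx 2 \text{ misclassified texts per ten}$$

$$\text{accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Accuracy alone does not say how many of the roughly two errors per ten are missed fraudulent letters (FN) rather than false alarms (FP).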

This is not an application for discovering who is lying, as the saying suggests, but the results do show that the classifier could serve, for example, as an initial filter to reduce the volume of texts to be examined. The more letters it is trained on, the more the system will learn; this will be addressed at a later stage of the research.
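How a classifier might act as an initial filter can be sketched as follows: each letter gets a fraud score, and only letters at or above a threshold are passed on for human review, highest score first. The letter names, scores and the 0.5 threshold are hypothetical; the article does not say how such a filter would be configured.

```python
def triage(scores: dict[str, float], threshold: float) -> list[str]:
    """Return the letters whose fraud score reaches the threshold, highest first."""
    flagged = [name for name, score in scores.items() if score >= threshold]
    return sorted(flagged, key=lambda name: scores[name], reverse=True)

# Hypothetical scores, e.g. a classifier's estimated probability of fraud.
scores = {"letter_a": 0.91, "letter_b": 0.12, "letter_c": 0.55, "letter_d": 0.30}
to_review = triage(scores, threshold=0.5)
print(to_review)  # ['letter_a', 'letter_c']
```

Lowering the threshold sends more letters to reviewers but reduces the chance of missing a fraudulent one; raising it does the opposite.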

Work reference:

Bel, N.; Bracons, G.; Anderberg, S. Finding Evidence of Fraudster Companies in the CEO's Letter to Shareholders with Sentiment Analysis. Information 2021, 12, 307. https://doi.org/10.3390/info12080307