Web tool to design virtual agent behaviours

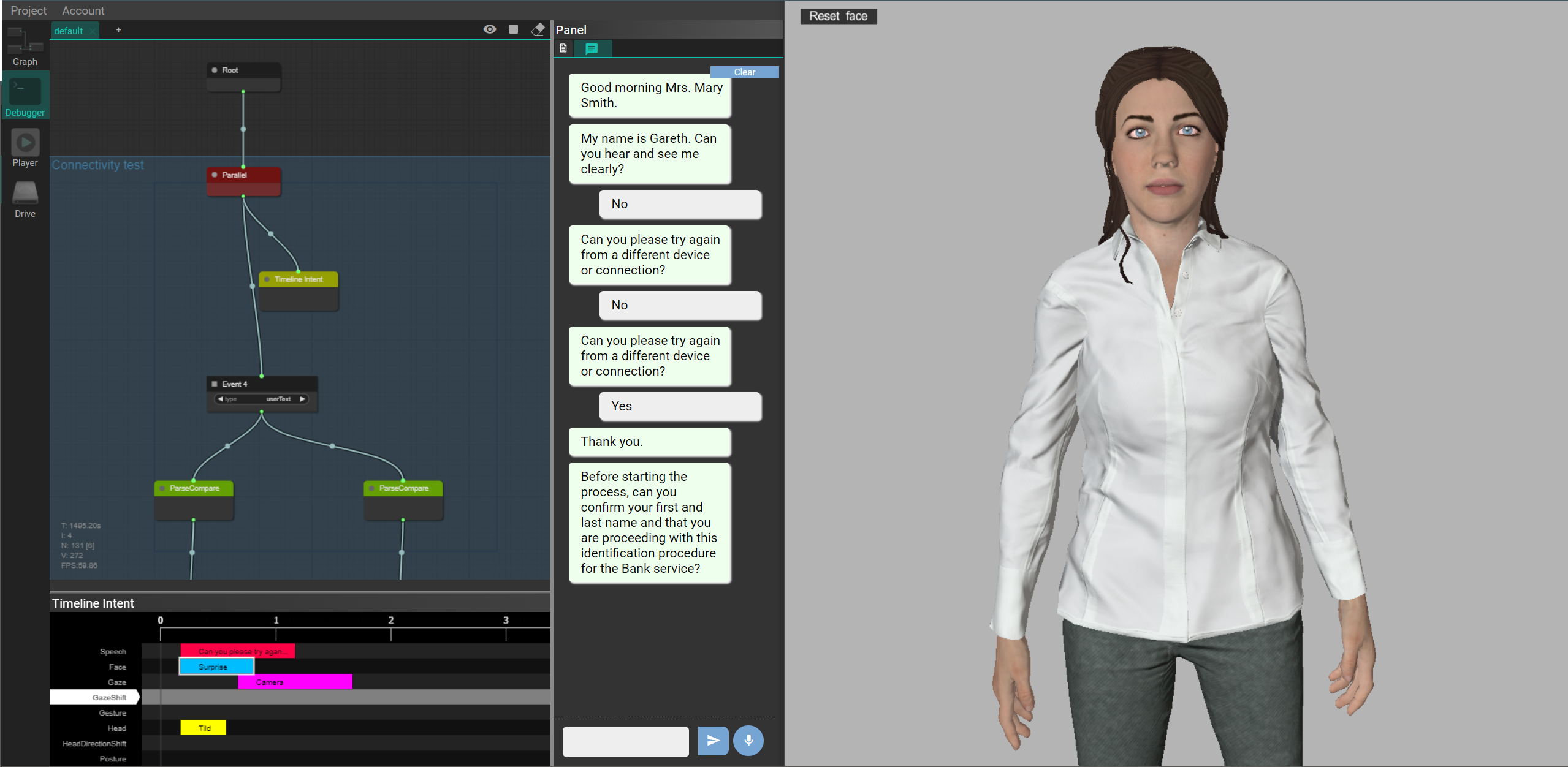

Within the context of PRESENT, UPF-GTI has been developing a web application for designing embodied conversational agents (ECAs) that interact with humans in specific scenarios. The approach is based on behaviour/decision trees and builds on previous work in the SAUCE project, where decision trees are used to decide the behaviour of an agent. In PRESENT, the trees are presented as a graph in a web editor, which provides a visual way to design complex behaviours. The editor lets designers create new behaviour trees and test them with an ECA, using the built-in chat (which simulates the user's speech input) and a provided scene created with our 3D web editor, WebGLStudio.
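To make the tree-based approach more concrete, here is a minimal Python sketch of a generic behaviour tree choosing an agent behaviour from chat input. It is not the project's code: the node classes, the `decide` helper, the `user_text` context key and the behaviour names are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical blackboard: user input plus whatever the nodes write back.
Context = Dict[str, Any]

SUCCESS, FAILURE = "success", "failure"


@dataclass
class Condition:
    """Leaf that succeeds when its predicate holds for the context."""
    predicate: Callable[[Context], bool]

    def tick(self, ctx: Context) -> str:
        return SUCCESS if self.predicate(ctx) else FAILURE


@dataclass
class Action:
    """Leaf that records a behaviour name for the agent and always succeeds."""
    name: str

    def tick(self, ctx: Context) -> str:
        ctx.setdefault("behaviours", []).append(self.name)
        return SUCCESS


@dataclass
class Sequence:
    """Runs children in order and fails as soon as one child fails."""
    children: List[Any]

    def tick(self, ctx: Context) -> str:
        for child in self.children:
            if child.tick(ctx) == FAILURE:
                return FAILURE
        return SUCCESS


@dataclass
class Selector:
    """Tries children in order and succeeds as soon as one child succeeds."""
    children: List[Any]

    def tick(self, ctx: Context) -> str:
        for child in self.children:
            if child.tick(ctx) == SUCCESS:
                return SUCCESS
        return FAILURE


# Assumed example tree: greet when the user says hello, otherwise ask again.
tree = Selector([
    Sequence([
        Condition(lambda c: "hello" in c["user_text"].lower()),
        Action("greet"),
    ]),
    Action("ask_to_repeat"),
])


def decide(user_text: str) -> List[str]:
    """Tick the tree once and return the behaviours it selected."""
    ctx: Context = {"user_text": user_text}
    tree.tick(ctx)
    return ctx["behaviours"]
```

In this sketch, `decide("Hello there")` selects the `greet` behaviour, while any other input falls through to `ask_to_repeat`. A graph editor would build the same kind of structure visually instead of in code.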

The range of behaviours that can be generated is extensive, from simple actions to complex behaviours that combine text/speech, gaze and facial expressions at specific moments in the action, gestures, body animation, and information the server needs to follow a particular process. This set can be extended over the course of the project to adapt to new requirements. Timeline nodes make it possible to create such complex behaviours: the designer can specify when each individual action has to be performed by the ECA, depending on the input of the human user and the contextual information. These nodes include a graphical timeline editor for designing the complex actions described above.
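One way to picture a timeline node is as a list of timed clips on separate tracks. The Python sketch below assumes this representation; the `Clip` fields, the track names and the example values are hypothetical and do not reflect the editor's actual data format.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Clip:
    """One timed action on a track of a (hypothetical) timeline node."""
    track: str       # e.g. "speech", "gaze", "face", "gesture"
    start: float     # seconds from the start of the timeline node
    duration: float  # seconds
    payload: str     # what to say, where to look, which animation to play


def active_clips(clips: List[Clip], t: float) -> List[Clip]:
    """Return the clips playing at time t, ordered by start time."""
    playing = (c for c in clips if c.start <= t < c.start + c.duration)
    return sorted(playing, key=lambda c: c.start)


def total_duration(clips: List[Clip]) -> float:
    """Return the time at which the last clip ends (0.0 for no clips)."""
    return max((c.start + c.duration for c in clips), default=0.0)


# Assumed example: a greeting that combines speech, gaze, face and gesture.
greeting = [
    Clip("speech", 0.0, 2.0, "Hello, how can I help?"),
    Clip("gaze", 0.0, 3.0, "look_at_user"),
    Clip("face", 0.5, 1.5, "smile"),
    Clip("gesture", 1.0, 1.0, "wave"),
]
```

With this data, only the speech and gaze clips are active at 0.25 s, all four overlap at 1.5 s, and only the gaze clip is still running at 2.5 s.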

This application is part of a larger system developed in collaboration with the other project partners. Although the web editor offers a quick way to visualize the interaction with the ECA, UPF-GTI has also implemented (and will keep improving) a system that receives contextual information about the scenario and the human user, interprets it, and outputs the corresponding behaviour to partners with stronger rendering and animation capabilities. This communication is handled by a WebSocket system developed for this purpose.
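The receive, interpret and output loop can be sketched as a single message handler. The Python example below leaves out the WebSocket transport and assumes JSON text messages; the message fields (`user`, `distance_m`, `greeted`) and the behaviour fields are invented for illustration and are not the project's actual protocol.

```python
import json


def handle_context_message(raw: str) -> str:
    """Turn one incoming context message into one outgoing behaviour message.

    Both message schemas are assumptions made for this sketch.
    """
    msg = json.loads(raw)
    user = msg.get("user", {})

    # Interpret the context: greet a nearby user who has not been greeted yet.
    is_near = user.get("distance_m", float("inf")) < 2.0
    if is_near and not msg.get("greeted", False):
        behaviour = {
            "type": "behaviour",
            "speech": "Hello!",
            "gaze": "user",
            "gesture": "wave",
        }
    else:
        behaviour = {"type": "behaviour", "gaze": "idle"}

    # The result would be sent back over the socket to the rendering side.
    return json.dumps(behaviour)
```

In a real deployment a function like this would sit inside the socket's message callback, so the decision logic stays independent of the transport and can be tested with plain strings.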