Framestore

Highly realistic agents

The sentient agent is a complex system integrating components from the different partners with off-the-shelf components. The goal is not to build each individual module but to allow the integration of the best toolsets (e.g. Google Dialogflow) and to develop modules only where adequate toolkits do not exist. FS will lead the definition of a modular architecture to ensure that all components work well with each other, specifically addressing the requirements for achieving the POCs at the different milestones. FS will work with the partners to agree on a set of uniform design principles to guide the individual development efforts. Much like the original Unix operating system, we will define a set of methods, procedures and interface standards that ensure that the system components are independent, so that they can be replaced; open, so that they may be enhanced; and transparent, so that they are visible to interested parties. This leads to an open platform for the development of agents.

There is a general flow of data from the human input capture through the processing of the emotional and knowledge-based content toward an appropriate agent response. The System Architecture will be designed to support multiple use cases where the requirements of one may be orthogonal to those of another. The goal of a photo-real, real-time human was specifically chosen as the most challenging target. In another scenario, those deploying the technology may be willing to trade off one aspect of visual quality to focus the rendering budget on interactivity in a highly complex lighting environment. Hardware choices at runtime will also have an effect. By keeping this idea in mind from the outset, it will be possible to re-compose the various system modules according to the requirements of the end-user application. This will also create opportunities for third-party companies to build on and extend the system based on their own requirements.
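The idea of replaceable modules behind a shared interface can be illustrated with a minimal sketch. Everything named here (`Frame`, `Module`, `KeywordEmotion`, `EchoDialogue`, `run_pipeline`) is a hypothetical placeholder chosen for illustration, not part of the PRESENT architecture, and the toy logic inside each module only stands in for real components.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


@dataclass
class Frame:
    """Data handed from module to module (fields are illustrative assumptions)."""
    user_text: str
    emotion: str = "neutral"
    response: str = ""
    trace: List[str] = field(default_factory=list)


class Module(Protocol):
    """Hypothetical shared interface: any object with a name and process()."""
    name: str

    def process(self, frame: Frame) -> Frame: ...


class KeywordEmotion:
    """Toy emotion module: an exclamation mark is treated as anger."""
    name = "keyword-emotion"

    def process(self, frame: Frame) -> Frame:
        frame.emotion = "angry" if "!" in frame.user_text else "neutral"
        frame.trace.append(self.name)
        return frame


class EchoDialogue:
    """Toy dialogue module: echoes the input, softened if the user seems angry."""
    name = "echo-dialogue"

    def process(self, frame: Frame) -> Frame:
        prefix = "I'm sorry to hear that. " if frame.emotion == "angry" else ""
        frame.response = prefix + "You said: " + frame.user_text
        frame.trace.append(self.name)
        return frame


def run_pipeline(modules: List[Module], frame: Frame) -> Frame:
    """Pass the frame through each module in order."""
    for module in modules:
        frame = module.process(frame)
    return frame


# Two compositions of the same modules for different application needs.
full = run_pipeline([KeywordEmotion(), EchoDialogue()], Frame("Where is my train!"))
light = run_pipeline([EchoDialogue()], Frame("Hello"))
```

Because each module depends only on the shared interface, dropping or swapping one (as in the `light` composition) does not require changes to the others.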

Augsburg

High degree of emotional sensitivity

The goal of this task is to provide virtual agents with the non-verbal behaviour necessary to be positively perceived as a sentient being during interaction with the user. Several aspects influence the observed quality of human-human interactions in terms of engagement, pleasantness or rapport. Synchronicity in body language, backchannel feedback, behavioural mirroring and the generation of shared attention are interpersonal behaviour traits that the virtual agent needs to show in order to create emotional contingency and bonding with a human user. UAu has focused previous work on the automatic recognition of interaction quality between human users in the form of multi-person Bayesian networks, which detect whether these non-verbal cues are present within interaction scenarios. In the PRESENT project, UAu will use this prior knowledge and these models to predict appropriate agent behaviour from multi-modal observation of the human user: based on known causal relations between non-verbal behaviour and interaction quality, we will create a prediction model to elicit correct interpersonal behaviour and feedback events as input for the agent engine.

Inria

Reactive character animation system

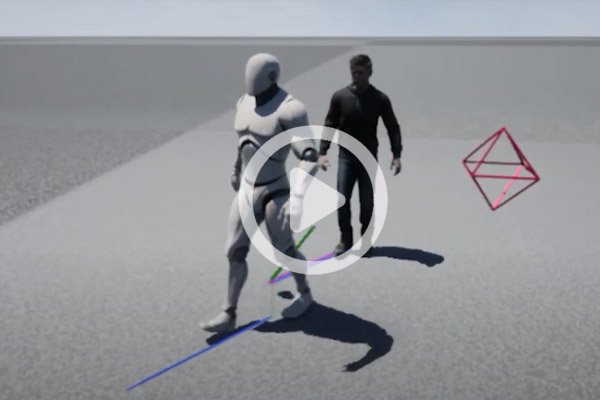

Led by Inria, PRESENT has investigated situations involving 1-to-1 and 1-to-n interactions between a user and virtual characters for interactive applications and virtual reality. Human-agent interactions require the generation of expressive motions and emotions that agents can perform. These form the basis for non-verbal communication (NVC) capabilities, such as expressing annoyance, impatience, anger or fear. The synthesis of such motions and emotions depends on multiple factors, such as the triggering source, the position of the user, the location of an event in the environment, or the state of neighbouring agents when simulating emotion propagation phenomena.

PRESENT provides a reactive character animation system that controls the motion of characters to render the desired reaction. Inria has also explored motion capture data editing techniques that offer a good trade-off between the naturalness of motion and performance. However, pre-recorded motions are not sufficient, since reactive motions depend on the features (location in the environment, level, etc.) of the user actions or events that triggered them.

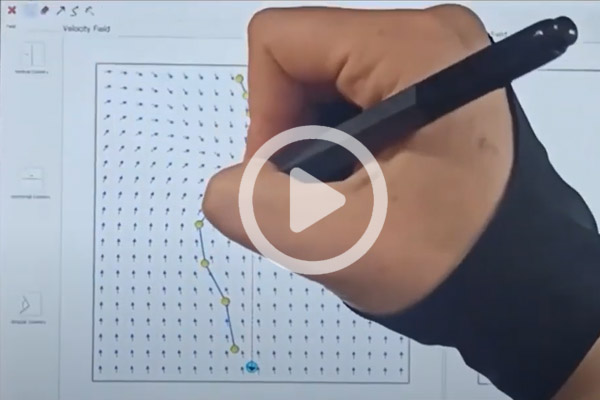

For this reason, Inria technologies allow the generation of plausible motion variations, controlling the global positioning of virtual agents and tuning their reactions and expressions. Two main tools were developed: Interaction Fields, which allows users to easily sketch collective behaviors, and Expressive Filter, which edits the motion of a virtual character to adapt it to the field of view of an observer.

In addition to motion, we have also explored non-verbal sensory channels to study the way humans perceive virtual characters. In particular, we focus on three aspects: contact, gaze, and proxemics. We explore situations, in virtual reality, in which a user interacts with virtual agents: rendering physical contact with haptic devices, simulating gaze behaviors, and evaluating how motions and appearance affect the way humans approach virtual characters.

InfoCert

High degree of security and trust in the identity of the users

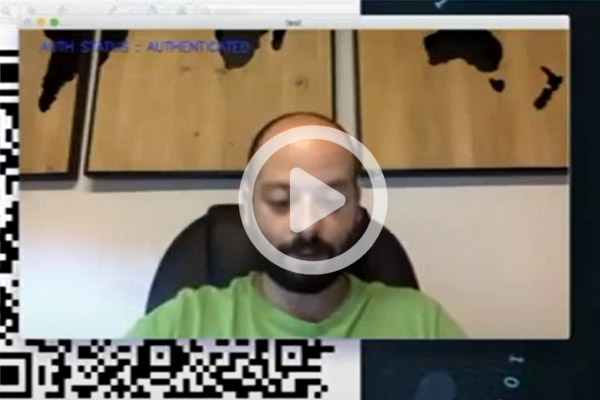

PRESENT offers a high degree of security and trust by means of its security module. The PRESENT project offers services to human users through virtual agents. Users have digital identities that can be used for authentication. The digital identity is a picture of the user and is cryptographically signed by a given entity. The digital identity is acquired in a registration phase and is stored in a so-called (digital) wallet. Several security levels are possible for the registration phase, depending on how strong the authentication needs to be. To make the system self-contained, we use “self-attested” identities, in which users themselves can create digital identities. Of course, in a real-world application it will be necessary to use third parties, e.g. Certification Authorities, that create the digital identities.

Following the Self-Sovereign paradigm, the digital identity of the user is kept in a private wallet.

The authentication services offered by the security module can work in a single-check mode or in a continuous mode: these two modes have been designed to handle the envisioned use cases. In the single-check mode, the authentication check is made only once. In the continuous mode, after the initial authentication, which is the same as the single-check authentication, the PRESENT virtual agents can continuously make authentication requests to keep checking the identity of the human user currently interacting with the virtual agent.

The digital identity used for the authentication is the binding of a biometric feature, namely the face of the user, with the real name of the user. The wallet contains a verifiable credential with the face of the user. Such a picture is compared with the pictures, taken by the virtual agent, of the person currently interacting with the virtual agent. In the continuous authentication mode, the virtual agent can keep taking pictures anytime, to repeat the check, for example at fixed time intervals.

The first authentication, the one performed in the single-check mode, involves access to the wallet of the user. This access has to be approved by the user: the app that manages the wallet asks permission to access the data (the data is protected by a password that the user needs to type). Such access will not be requested for the subsequent checks in the continuous mode.
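The two authentication modes described above can be sketched as follows. This is a simplified illustration under several assumptions that are not taken from the PRESENT security module: faces are represented as small numeric feature vectors, matching uses cosine similarity with an arbitrary threshold of 0.8, the reference face is cached after the first check so that the wallet is not accessed again, and verification of the credential's signature is omitted. `Wallet` and `Authenticator` are hypothetical names.

```python
import math
from typing import List, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Similarity of two feature vectors; 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class Wallet:
    """Hypothetical private wallet holding one face credential."""

    def __init__(self, name: str, face: List[float], password: str) -> None:
        self._credential = {"name": name, "face": face}
        self._password = password

    def read_credential(self, password: str) -> Optional[dict]:
        # The user approves access by typing the wallet password.
        return self._credential if password == self._password else None


class Authenticator:
    """Hypothetical agent-side checker supporting both modes."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold  # assumed value, not from the source
        self._reference: Optional[List[float]] = None

    def single_check(self, wallet: Wallet, password: str,
                     captured: Sequence[float]) -> bool:
        """Initial authentication: needs approved access to the wallet."""
        credential = wallet.read_credential(password)
        if credential is None:
            return False
        ok = cosine_similarity(credential["face"], captured) >= self.threshold
        # Assumption: keep the reference so later checks skip the wallet.
        self._reference = credential["face"] if ok else None
        return ok

    def continuous_check(self, captured: Sequence[float]) -> bool:
        """Repeat check, e.g. at fixed intervals, without wallet access."""
        if self._reference is None:
            return False
        return cosine_similarity(self._reference, captured) >= self.threshold


wallet = Wallet("Alice", [1.0, 0.0, 0.0], "secret")
auth = Authenticator()
first = auth.single_check(wallet, "secret", [0.9, 0.1, 0.0])
later_same_user = auth.continuous_check([0.95, 0.05, 0.0])
later_other_user = auth.continuous_check([0.0, 1.0, 0.0])
```

In the single-check mode only `single_check` is called. In the continuous mode the agent keeps calling `continuous_check` with newly captured pictures after the initial authentication has succeeded.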

Brainstorm

High-end professional and consumer level services

Using Brainstorm’s powerful InfinitySet, PRESENT provides a way to integrate virtual characters seamlessly with real scenes and scenarios.

- The Brainstorm Unreal Player. This application enables the Unreal engine renderer at InfinitySet’s request, along with all the plug-ins required to interface with InfinitySet, the required scenario and the selected virtual agents. New features have been added to this application, such as multi-render to enable switching cameras, discovery and management of scenes and blueprint parameters, loading of new plug-ins at run time, and a protocol to control InfinitySet from the virtual agent module and vice versa.

- InfinitySet adaptation to UE. This adaptation receives UE renders through shared memory in real time, including not only the colour channels but also the alpha and depth channels, in order to better integrate the Unreal and Infinity engine renders and to allow for occlusions and other graphic effects. It will be able to deliver video back to UE for use in its higher-quality render, to control any parameter in the UE scene graph, and to send real-time updated 3D models to be rendered with higher quality for projecting the talent’s shadows or for effects such as reflections on UE geometries.

To provide a more interactive scene, InfinitySet has been extended with an image analysis module that estimates the talent’s head position so that the virtual agent can look at them during dialogue.
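Once a head position has been estimated, turning the agent's gaze toward it reduces to a small piece of geometry. The following is a minimal sketch, not InfinitySet code: it assumes a coordinate system with the y axis pointing up and the z axis pointing forward from the agent, positions given in the same units, and a hypothetical function name `look_at_angles`.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def look_at_angles(agent_head: Vec3, talent_head: Vec3) -> Tuple[float, float]:
    """Return (yaw, pitch) in degrees that point the agent at the talent.

    Assumed convention: yaw rotates around the up (y) axis, positive toward +x;
    pitch is positive when the target is above the agent's head.
    """
    dx = talent_head[0] - agent_head[0]
    dy = talent_head[1] - agent_head[1]
    dz = talent_head[2] - agent_head[2]
    yaw = math.degrees(math.atan2(dx, dz))
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    return yaw, pitch


# Talent standing one unit ahead and one unit to the side, at the same height.
yaw, pitch = look_at_angles((0.0, 1.7, 0.0), (1.0, 1.7, 1.0))
```

In practice the resulting angles would typically be smoothed over time and clamped to a plausible range for the neck and eyes before being applied to the character.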

CREW

Engagement with sentient agents in a live setting

VR is an experiential medium with a certain level of agency. It implies acknowledging the presence and by extension the emotional presence of the immersant/user. ‘How is your emotional presence being read and understood? What does it generate on the other side? How to control it and how to work with it as a director?’

In VR, for instance, 1-to-n interactions between a user and multiple characters create strong engagement and a strong sense of immersion and embodiment. Staging such interactions through non-verbal communication in social situations and spatial environments, e.g. behaviour in train stations, produced strong results.

Delirious Departures is a VR live art performance featuring representations of humans on a spectrum of sentience: from static sculptures through sentient agents to a live actor. Commissioned by Europalia Trains&Tracks, Delirious Departures is set in a post-pandemic world of semi-abstract railway stations and travelers. The performance premiered at the Royal Museum of Fine Art, was part of the royal Belgian state visit to Greece and was presented at the SIGGRAPH 2022 Immersive Pavilion.

Soulhacker delved deeper into the exploration of emotional ‘presence’. Soulhacker is a VR experience based on the methods and concepts of Milton Erickson. Collaborating with Brai3n’s neuropsychiatrist Dr Georges Otte, CREW created a VR application for treating depression. Empowering users to change their environment, Soulhacker extends psychotherapy to an embodied immersive experience. The experience exists in two forms: an artistic one and a therapeutic one. The therapeutic one was deployed in clinical tests, and a peer-reviewed article is forthcoming.

Adam is a screen-based application involving verbal communication, facial expression and emotional sensing. Adam mirrors emotions in a highly realistic and expressive fashion. CREW recontextualized a technical proof of concept into a tool that can be used for the treatment of autism.

CREW has further developed and evaluated the Straptrack large area tracking system, which has evolved into a proof of concept of a new system developed by EDM University of Hasselt. Custom tracking solutions are crucial for future works by CREW.

CubicMotion

Efficiently create new virtual agents

CubicMotion has used its expertise in creating facial animation solvers from video to research and develop a low-latency, high-quality text-to-animation technology for a digital avatar.

The first stage is to create facial animation driven by performance to provide a benchmark for the visual quality of the digital character and to serve as training data for the facial animation engine. CM has used video cameras on a head-mounted rig to capture the fine detail of an actor's face and transfer the movements to a digital model. Research will advance the current state of the art with higher-fidelity capture, denser tracking and 3D acquisition to create photo-real animation to train the system. Solving this data to the character’s controls has allowed the captured performance data to be represented as a compact, semantically meaningful set of parameters. This facilitates the application of an emotional overlay to the animation so that an agent can convey emotions visually to a user as part of an interactive system.

UPF-GTI

Your own virtual assistant

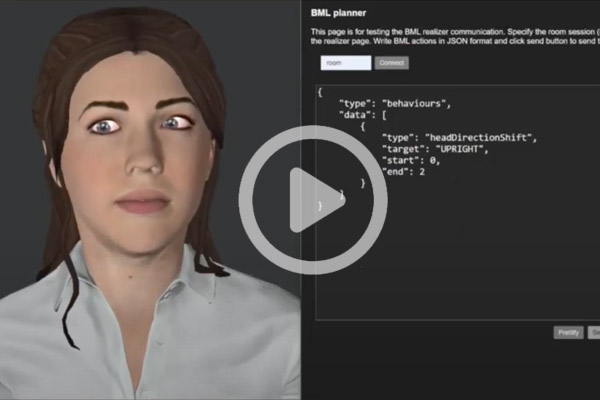

PRESENT provides virtual conversational characters that can be used in different situations. Therefore, it is necessary to plan their behaviour, both verbal and non-verbal, and decide what actions to carry out depending on how their interlocutor acts. Taking advantage of our experience in the development of virtual agents on the web, GTI has created a system that allows you to easily create your own virtual assistant. This system consists of the following independent parts:

Behaviour Planner: A web application that uses modified decision trees to determine the behaviour of a conversational agent. The trees are presented as a graph in a web editor, providing visual support for designing complex behaviours with a node-based programming approach. It can also be tested with our own character. As it has been developed as a JavaScript and Node.js library, it can be included in any application that supports these technologies. You only have to export the decision tree to be able to use it externally.

Behaviour Realizer: A web player that interprets and executes the behaviours generated by the Behaviour Planner (or other applications). The actions are organized in time through temporal marks and can include verbal and non-verbal behaviours, following the BML (Behavior Markup Language) instructions. It can also be used in combination with a sensory system that integrates inputs and shows the appropriate emotional reactions of the character. Our research has covered not only procedural animation but also web rendering, implementing techniques for the visual enhancement of skin, eyes and hair.

Thus, this system has allowed us to create a virtual clerk at our university that can provide information about where a person, a research group or a place on campus is. It has also allowed us to test other use cases more quickly in a web context.
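The division of labour between the Behaviour Planner and the Behaviour Realizer can be sketched in a few lines. This is an illustrative toy in Python, not the GTI JavaScript library: it uses a plain binary decision tree rather than the modified trees mentioned above, and simple timed action records rather than real BML. All names (`Action`, `Leaf`, `Decision`, `plan`, `realize`) and the example utterances are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Union


@dataclass
class Action:
    start: float   # temporal mark, in seconds from the start of the block
    kind: str      # e.g. "speech", "gaze", "face"
    payload: str


@dataclass
class Leaf:
    actions: List[Action]


@dataclass
class Decision:
    test: Callable[[Dict[str, str]], bool]
    if_true: "Node"
    if_false: "Node"


Node = Union[Leaf, Decision]


def plan(node: Node, context: Dict[str, str]) -> List[Action]:
    """Planner: walk the tree until a leaf is reached, return its actions."""
    while isinstance(node, Decision):
        node = node.if_true if node.test(context) else node.if_false
    return node.actions


def realize(actions: List[Action]) -> List[str]:
    """Realizer: order actions by temporal mark and render them as text."""
    ordered = sorted(actions, key=lambda a: a.start)
    return [f"{a.start:.1f}s {a.kind}: {a.payload}" for a in ordered]


# A tiny tree for a virtual clerk: react differently to a location query.
tree = Decision(
    test=lambda ctx: ctx.get("intent") == "find_person",
    if_true=Leaf([
        Action(0.5, "speech", "Let me look that up."),
        Action(0.0, "gaze", "user"),
    ]),
    if_false=Leaf([
        Action(0.0, "face", "smile"),
        Action(0.2, "speech", "How can I help?"),
    ]),
)

timeline = realize(plan(tree, {"intent": "find_person"}))
```

Keeping the planner's output as plain data is what lets the two parts stay independent: any realizer that understands the action records can execute them, and the realizer can equally be fed by another application.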

Photoreal REaltime Sentient ENTity (PRESENT)

PRESENT is a three-year EU Research and Innovation project between 8 companies and research institutions to create virtual digital humans (sentient agents) that look entirely naturalistic, demonstrate emotional sensitivity, establish an engaging dialogue, add sense to the experience, and act as trustworthy guardians and guides in the interfaces for AR, VR, and more traditional forms of media.

Department of Engineering and Information and Communication Technologies

Roc Boronat building (Poblenou campus)

Roc Boronat, 138

08018 Barcelona

Project Manager:

Principal Investigators: