Research

Music creativity modeling - Expressive music performance

The aim of our research is to understand and simulate music creativity by modeling music processes and high-level music actions. We are especially interested in the development of machine learning techniques for building semantic models of different aspects of music creativity. We are interested in analyzing the semantic models in order to understand creativity and in developing intelligent music systems, such as music mining, information retrieval, and personalization systems. The lessons learnt by studying and modeling creativity in music are generalizable to other domains in which creativity plays an important role, such as design, architecture, and the arts.

As an instance of music creativity, we investigate the modeling of one of the most challenging problems in music informatics: expressive music performance. Expressive music performance studies how skilled musicians manipulate sound properties such as pitch, timing, amplitude and timbre in order to ‘express’ their interpretation of a musical piece. While these manipulations are clearly distinguishable by listeners and are often reflected in concert attendance and recording sales, they are extremely difficult to formalize. Using machine learning techniques, we investigate the creative process of manipulating these sound properties in an attempt to understand and recreate expression in performances.

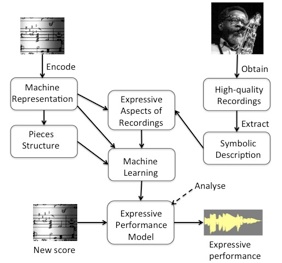

Fig. 1. Research framework for learning, analyzing and applying an expressive performance model. High-quality recordings of a professional musician are obtained (e.g. commercial recordings) and their respective music scores are encoded into a machine-readable format. Sound analysis techniques based on spectral models are applied to extract high-level musical features, which together with the score description and a structural analysis of the pieces are fed to machine learning algorithms in order to obtain an expressive performance model. The model is analyzed, possibly refined, and used to generate human-like expressive performances of new (unseen) music scores. You can listen to an expressive performance automatically generated by one of our models here.
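The last step of the framework, applying a learnt model to an unseen score, can be illustrated with a minimal sketch. It is not the method used in the publications below (their titles indicate rule-based evolutionary and inductive logic programming approaches); a k-nearest-neighbour regressor stands in for the learner. The note descriptors, the toy training values and the function names `knn_duration_ratio` and `render` are all assumptions made for illustration.

```python
def knn_duration_ratio(train, note, k=3):
    """Average the duration ratios of the k training notes closest to `note`."""
    nearest = sorted(
        train,
        key=lambda example: sum((a - b) ** 2 for a, b in zip(example[0], note)),
    )[:k]
    return sum(ratio for _, ratio in nearest) / k


def render(train, score_notes, k=3):
    """Predict a performed duration (in beats) for every note of an unseen score."""
    return [note[0] * knn_duration_ratio(train, note, k) for note in score_notes]


# Hypothetical descriptors per note:
# (score duration in beats, interval to next note in semitones, metrical strength 0..1)
# paired with the ratio performed duration / score duration (toy values, not real data).
train = [
    ((1.0, 2, 1.0), 1.10),
    ((0.5, -1, 0.5), 0.95),
    ((0.5, 1, 0.25), 0.90),
    ((2.0, -3, 1.0), 1.20),
    ((1.0, 0, 0.5), 1.00),
    ((0.25, 2, 0.25), 0.85),
]

unseen_score = [(1.0, 1, 1.0), (0.5, -2, 0.5), (2.0, -4, 1.0)]
performed = render(train, unseen_score, k=3)
```

In practice the descriptors would need to be normalised before computing distances, since here the interval in semitones dominates the other two.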

Selected Publications

- Ramirez, R., Maestre, E., Serra, X. (2012). A Rule-Based Evolutionary Approach to Music Performance Modeling, IEEE Transactions on Evolutionary Computation, 16(1): 96-107.

- Ramirez, R., Maestre, E., Serra, X. (2011). Automatic Performer Identification in Celtic Violin Audio Recordings, Journal of New Music Research, 40(2): 165–174.

- Ramirez, R., Maestre, E., Serra, X. (2010). Automatic performer identification in commercial monophonic Jazz performances, Pattern Recognition Letters, 31: 1514-1523.

- Ramirez, R., Perez, A., Kersten, S., Rizo, D., Román, P., Iñesta, J.M. (2010). Modeling Violin Performances Using Inductive Logic Programming, Intelligent Data Analysis, 14(5): 573-586.

Brain-computer Interfaces

We are also interested in the use of emotions in brain-computer interfaces. Emotions in human-computer interaction are important in order to address new user needs. We apply machine learning techniques to detect emotion from brain activity, recorded as an electroencephalogram (EEG) with low-cost devices. We are interested in low-cost devices because we believe that for our research to have an impact on the general public, the technologies we develop should be affordable by most people rather than restricted to laboratory use. We are starting to apply these technologies to investigate the potential benefits of combining music and neurofeedback for improving users’ health and quality of life. Specifically, we investigate the emotional reinforcement capacity of automatic music neurofeedback systems, and its effects on conditions such as depression, anxiety or dementia.

Fig. 2. A wireless low-cost EEG device is used for controlling in real-time the expressive content of a piece of music (timing, dynamics and articulation) based on the emotional state of a user.

Selected Publications

- Ramirez, R., Vamvakousis, Z. (2012). Detecting Emotion from EEG Signals using the Emotiv Epoc Device, Lecture Notes in Computer Science 7670, Springer.

- Ramirez, R., Giraldo, S., Vamvakousis, Z. (2013). EEG-Based Emotion Detection in Music Listening, In Proceedings of the Fifth International Brain-Computer Interface Meeting 2013. Graz University of Technology Publishing House, California, USA.

- Ramirez, R., Giraldo, S., Vamvakousis, Z. (2013). EEG-Based Emotion Detection in Live-Music Listening, In Proceedings of the International Conference on Music & Emotion. Jyväskylä, Finland.

Neural Activity Prediction & Cognitive State Decoding

In this area, our research aims to build computational models able to predict the neural activation, as measured by functional magnetic resonance imaging (fMRI), produced in humans by different auditory stimuli. We train the model with acoustic features extracted from auditory stimuli and the associated observed fMRI images. Thus, the model establishes a predictive relationship between the acoustic features extracted from the auditory stimuli and their associated neural activation. We show that the model is capable of predicting fMRI neural activity associated with the stimuli considered with accuracies far above those expected by chance. This work represents a natural progression from building catalogues of patterns of fMRI activity associated with particular auditory stimuli to constructing computational models that predict the fMRI activity for stimuli for which fMRI data are not yet available.

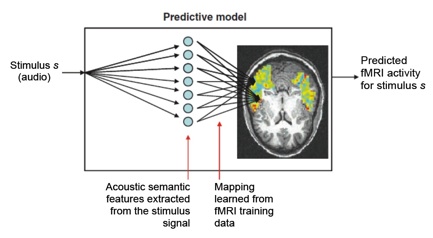

Fig. 3. fMRI activation is predicted in two steps: (1) encoding of the stimulus signal s as a set of acoustic features, and (2) prediction of the activation of each voxel in the fMRI image as a function of the acoustic features.
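The two steps in Fig. 3 can be sketched as follows. This is a minimal illustration under stated assumptions, not the model used in our studies: the two acoustic features (RMS energy and zero-crossing rate), the per-voxel linear form fitted by ridge-regularised least squares, the synthetic tones and the synthetic "voxel" responses are all chosen only to make the example self-contained.

```python
import math


def tone(freq, amp, n=400, rate=8000):
    """Synthetic stimulus: a short sine tone (stands in for a real auditory stimulus)."""
    return [amp * math.sin(2 * math.pi * freq * t / rate) for t in range(n)]


def acoustic_features(signal):
    """Step 1: encode a stimulus signal as [RMS energy, zero-crossing rate]."""
    n = len(signal)
    rms = math.sqrt(sum(x * x for x in signal) / n)
    crossings = sum((a < 0) != (b < 0) for a, b in zip(signal, signal[1:]))
    return [rms, crossings / (n - 1)]


def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_voxel(features, activations, ridge=1e-9):
    """Step 2 (training): least-squares weights for one voxel, with a bias term."""
    rows = [f + [1.0] for f in features]
    d = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(r[i] * y for r, y in zip(rows, activations)) for i in range(d)]
    return solve(xtx, xty)


def predict_voxel(weights, feature_vec):
    """Step 2 (prediction): activation of one voxel for a new feature vector."""
    return sum(w * x for w, x in zip(weights, feature_vec + [1.0]))


# Synthetic training set: nine tones and two made-up voxel responses.
train = [acoustic_features(tone(f, a)) for f in (200, 400, 800) for a in (0.2, 0.5, 1.0)]
voxels = [
    [2.0 * rms + 1.0 for rms, zcr in train],
    [0.5 * rms - 3.0 * zcr for rms, zcr in train],
]
models = [fit_voxel(train, v) for v in voxels]

# Predict the (two-voxel) image for a stimulus that was not in the training set.
new = acoustic_features(tone(600, 0.7))
predicted_image = [predict_voxel(w, new) for w in models]
```

One model is fitted per voxel, so the predicted image is simply the list of per-voxel predictions for the new stimulus.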

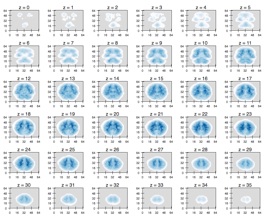

Fig. 4. The rendering shows the prediction accuracy of each voxel, averaged over many participants. Darker blue indicates higher accuracy.

We are also interested in designing and applying machine learning algorithms for both feature selection and classifier training to the problem of discriminating instantaneous cognitive states produced by different auditory stimuli. This problem is also interesting from the machine learning point of view, since it provides a case study of training classifiers with extremely high-dimensional (40,000-60,000 features), scarce (30-80 examples) and noisy data. We associate a class with each of the cognitive states of interest and train classifiers that, given a subject’s observed instantaneous fMRI data, predict one of the classes. We train the classifiers by providing examples consisting of fMRI observations along with the known cognitive state of the subject.
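The combination of feature selection and classifier training on many features and few examples can be sketched as follows. This is an illustrative setup only: the separation score, the nearest-centroid classifier, the leave-one-out evaluation and the synthetic data (scaled down to 300 features and 40 examples, with the first five features made informative) are assumptions, not the algorithms reported in the publications below. Feature selection is repeated inside every fold so that the held-out example never influences which features are kept.

```python
import random


def class_separation(column, labels):
    """Absolute difference of the two class means over the pooled standard deviation."""
    a = [x for x, c in zip(column, labels) if c == 0]
    b = [x for x, c in zip(column, labels) if c == 1]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    var_a = sum((x - mean_a) ** 2 for x in a) / len(a)
    var_b = sum((x - mean_b) ** 2 for x in b) / len(b)
    return abs(mean_a - mean_b) / ((var_a + var_b) / 2 + 1e-12) ** 0.5


def select_features(X, y, k):
    """Indices of the k features that best separate the two classes."""
    scores = [class_separation([row[j] for row in X], y) for j in range(len(X[0]))]
    return sorted(range(len(scores)), key=scores.__getitem__, reverse=True)[:k]


def nearest_centroid(X, y, keep):
    """Train a nearest-centroid classifier restricted to the selected features."""
    centroids = {}
    for c in set(y):
        rows = [row for row, label in zip(X, y) if label == c]
        centroids[c] = [sum(r[j] for r in rows) / len(rows) for j in keep]

    def classify(row):
        return min(
            centroids,
            key=lambda c: sum((row[j] - m) ** 2 for j, m in zip(keep, centroids[c])),
        )

    return classify


def leave_one_out_accuracy(X, y, k):
    """Hold out each example in turn; select features and train on the rest."""
    hits = 0
    for i in range(len(X)):
        X_train, y_train = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        keep = select_features(X_train, y_train, k)
        hits += nearest_centroid(X_train, y_train, keep)(X[i]) == y[i]
    return hits / len(X)


# Synthetic stand-in for fMRI observations: two cognitive states (0 and 1).
rng = random.Random(0)
n_examples, n_features, informative = 40, 300, 5
y = [i % 2 for i in range(n_examples)]
X = [[rng.gauss(3.0 * label if j < informative else 0.0, 1.0)
      for j in range(n_features)] for label in y]

accuracy = leave_one_out_accuracy(X, y, k=informative)
```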

Selected Publications

- Ramirez, R., Maestre, E., Gomez, I. (submitted). Human Brain Activity Prediction for Rhythm Perception Tasks: an fMRI study. NeuroImage.

- Gómez, I., Ramirez, R. (2011). A Data Sonification Approach to Cognitive State Identification, International Conference on Auditory Display, Budapest, Hungary.

- Ramirez, R., Puiggros, M. (2007). A Machine Learning Approach to Detecting Instantaneous Cognitive States from fMRI Data, Lecture Notes in Computer Science 4426, Springer.

- Ramirez, R., Puiggros, M. (2007). A Genetic Programming Approach to Feature Selection and Classification of Instantaneous Cognitive States, Lecture Notes in Computer Science 4448, Springer.