Objectives

O1: Recording and annotation of a 3D+t dataset of fetal surgeries

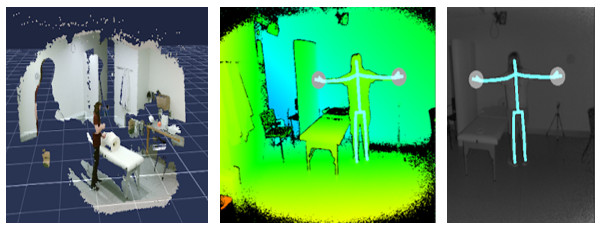

The goal is to record live TTTS (twin-to-twin transfusion syndrome) surgeries as time-varying point clouds using RGB cameras, and to use state-of-the-art deep learning networks to annotate and segment objects of interest and key events. These recorded data will form the core training dataset for our application.

O2: Augmented reality interface for training and simulation of fetal surgery

Our goal is to develop a mixed reality user interface that feels natural and comfortable for the surgeon, allowing her to explore the patient's anatomy and rehearse the steps involved in a real TTTS surgery, such as ultrasound (US) exploration of the patient, choice of the entry point, fetoscope navigation using only the video feed, and photocoagulation of the anastomoses.

O3: Synthetic generation of realistic ultrasound and fetoscopy video

To create a useful training environment for the surgeon, we will make the textures of all the objects she interacts with as realistic as possible and ensure that the US images and fetoscopic video feed simulated by our system are comparable to what surgeons see in a real operation.

O4: 3D-printed phantom for realistic interaction

We will explore the impact of combining a realistic phantom with the augmented reality interface to reproduce the feel of manipulating the fetoscope in a real environment.