RMC-MIL for weakly-supervised facial behavior analysis

Regularized Multi-Concept MIL for weakly-supervised facial behavior categorization

Adrià Ruiz, Joost Van de Weijer and Xavier Binefa

Abstract

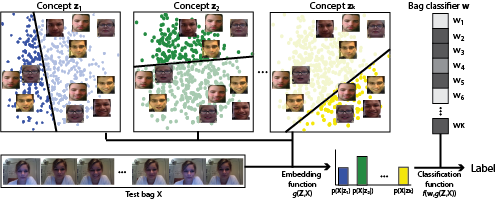

We address the problem of estimating high-level semantic labels for videos of recorded people by analysing their facial expressions. This problem, which we refer to as facial behavior categorization, is a weakly-supervised learning problem: frame-by-frame facial gesture annotations are not available, and only weak labels at the video level are given. The goal is therefore to learn a set of discriminative expressions and how they determine the video weak labels. Facial behavior categorization can be posed as a Multi-Instance Learning (MIL) problem, and we propose a novel MIL method called Regularized Multi-Concept MIL (RMC-MIL) to solve it. In contrast to previous approaches applied in facial behavior analysis, RMC-MIL follows a Multi-Concept assumption, which allows different facial expressions (concepts) to contribute differently to the video label. Moreover, to handle the high-dimensional nature of facial descriptors, RMC-MIL uses a discriminative approach to model the concepts and structured sparsity regularization to discard non-informative features. RMC-MIL is posed as a convex-constrained optimization problem in which all the parameters are jointly learned using the Projected Quasi-Newton method. In our experiments, we use two public datasets to show the advantages of the Regularized Multi-Concept approach and its improvement over existing MIL methods. RMC-MIL outperforms state-of-the-art results on the UNBC dataset for pain detection.
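The Multi-Concept assumption and the structured sparsity regularizer described above can be illustrated with a small sketch. It rests on assumptions that are ours, not necessarily the exact RMC-MIL formulation: each concept is a linear classifier on frame descriptors; a concept's score in a video is the maximum of its sigmoid response over frames; the video score is a logistic combination of concept scores with one weight per concept; and structured sparsity is expressed as a constraint on the ℓ1,2 norm of the concept weight matrix, with each feature column forming one group. The helper names (`concept_scores`, `video_score`, `project_l1_ball`, `project_group_l1l2`) are hypothetical. The Projected Quasi-Newton solver itself is not shown; the group projection is the kind of step a projected method applies to keep the parameters feasible.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]  # rows = concepts, columns = descriptor features


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def concept_scores(frames: Matrix, W: Matrix, b: Vector) -> Vector:
    """Score of each concept in a video (bag): the maximum sigmoid response
    of that concept's linear classifier over all frames (instances).
    Max-pooling over frames is an assumption of this sketch."""
    scores = []
    for w_k, b_k in zip(W, b):
        responses = (sigmoid(sum(wi * xi for wi, xi in zip(w_k, x)) + b_k) for x in frames)
        scores.append(max(responses))
    return scores


def video_score(frames: Matrix, W: Matrix, b: Vector, alpha: Vector, alpha0: float) -> float:
    """Video-level probability. Each concept k contributes with its own weight
    alpha[k] (positive, negative or zero): the Multi-Concept idea."""
    c = concept_scores(frames, W, b)
    return sigmoid(sum(a_k * c_k for a_k, c_k in zip(alpha, c)) + alpha0)


def project_l1_ball(v: Vector, tau: float) -> Vector:
    """Euclidean projection of a nonnegative vector onto {u : sum(u) <= tau},
    tau > 0, using the standard sort-based threshold search."""
    if sum(v) <= tau:
        return list(v)
    u = sorted(v, reverse=True)
    cumsum, theta = 0.0, 0.0
    for j, u_j in enumerate(u, start=1):
        cumsum += u_j
        t = (cumsum - tau) / j
        if u_j - t > 0:  # last index satisfying this gives the threshold
            theta = t
    return [max(v_i - theta, 0.0) for v_i in v]


def project_group_l1l2(W: Matrix, tau: float) -> Matrix:
    """Project W onto {W : sum_j ||W[:, j]||_2 <= tau}. Each feature column is
    one group, so a feature is either shrunk or zeroed for all concepts."""
    n_feat = len(W[0])
    norms = [math.sqrt(sum(row[j] ** 2 for row in W)) for j in range(n_feat)]
    new_norms = project_l1_ball(norms, tau)
    scale = [nn / n if n > 0 else 0.0 for n, nn in zip(norms, new_norms)]
    return [[row[j] * scale[j] for j in range(n_feat)] for row in W]


if __name__ == "__main__":
    frames = [[0.0, 0.0], [1.0, 0.0]]  # toy video: 2 frames, 2 features
    W = [[2.0, 0.0], [0.0, 2.0]]       # 2 concepts
    b = [0.0, 0.0]
    print(concept_scores(frames, W, b))
    print(video_score(frames, W, b, alpha=[1.0, -1.0], alpha0=0.0))
    print(project_group_l1l2([[3.0, 0.1], [4.0, 0.0]], tau=2.0))
```

Because a whole feature column is scaled to zero when its group norm falls below the threshold, a discarded descriptor dimension is removed for every concept at once. This is how a group constraint discards non-informative features rather than isolated weights.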

Overview of RMC-MIL

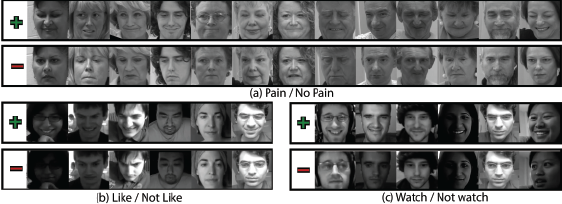

Qualitative Results